Vitalik Buterin’s In-Depth View: AI Should Focus on Enhancing Human Capabilities, Not Full Autonomy

Image source: https://x.com/VitalikButerin

Background of the Latest Perspective

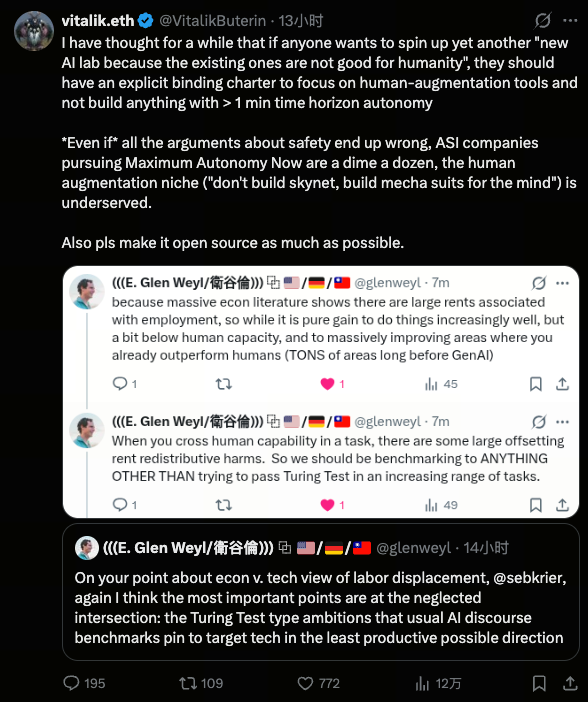

According to recent reports, Ethereum co-founder Vitalik Buterin argues that the future of AI should center on augmenting human capabilities rather than pursuing fully autonomous artificial superintelligence (ASI). In social media posts and public commentary, he says that emerging AI labs should adopt clear charters that prioritize human-machine collaboration and the development of augmentation tools. He further recommends against building systems capable of more than one minute of autonomous operation.

This perspective emerges amid intensifying global debate over AI safety, autonomy risks, and regulatory frameworks. As artificial general intelligence (AGI) and artificial superintelligence (ASI) increasingly dominate public discourse, questions about AI replacing human decision-making and exceeding human control have become central topics in both the technology sector and mainstream society.

Vitalik’s Core Arguments

Vitalik’s position centers on two key points:

- AI should focus on human augmentation, a direction he believes is genuinely beneficial to society yet remains underdeveloped.

- Development of systems with prolonged autonomous decision-making should be avoided. He suggests imposing a limit, such as restricting autonomous operation to no more than one minute, to ensure humans always retain control over AI’s behavioral boundaries.

He notes that while many companies pursuing ASI currently aim for high levels of autonomy, AI tools designed to assist human reasoning, enhance productivity, and drive social progress are still scarce. He therefore advocates shifting technical research and societal attention toward areas that strengthen human cognition and capabilities.
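To make the one-minute idea concrete, here is a minimal Python sketch of what such a limit could look like in software. It is an illustration only, not something Vitalik has published: the function name `run_with_autonomy_budget`, the `approve` callback, and the choice to check the clock between steps are all assumptions made for this example.

```python
import time
from typing import Callable, Iterable, List

# Assumption: 60 seconds mirrors the "one minute" limit mentioned in the article.
AUTONOMY_BUDGET_SECONDS = 60.0


def run_with_autonomy_budget(
    steps: Iterable[Callable[[], str]],
    approve: Callable[[List[str]], bool],
    budget: float = AUTONOMY_BUDGET_SECONDS,
    clock: Callable[[], float] = time.monotonic,
) -> List[str]:
    """Run steps in order, pausing for human approval once the budget is spent.

    The check is cooperative: it happens between steps, so a single
    long-running step is not interrupted partway through.
    """
    results: List[str] = []
    window_start = clock()
    for step in steps:
        if clock() - window_start >= budget:
            # Budget spent: hand control back to a human before continuing.
            if not approve(results):
                break
            # Approval opens a fresh autonomous window.
            window_start = clock()
        results.append(step())
    return results
```

In this sketch, `approve` stands in for whatever review process a human operator would use, such as reading the results so far and deciding whether the system may continue. A stricter design would also interrupt a step that runs past the budget, which this example does not attempt.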

AI Autonomy vs. Human Augmentation

Fully autonomous AI generally refers to systems that operate outside of immediate human control, making decisions and executing actions independently. These systems possess significant complexity and potential but also introduce ethical, safety, and loss-of-control risks.

By contrast, AI designed for human augmentation focuses on supporting human thought, decision-making, and physical tasks, for example by improving information processing, assisting creative work, and optimizing complex system analysis. These tools have well-defined usage boundaries and depend on human input and supervision, integrating human-machine collaboration into real-world workflows.

The Future Value of Human-Machine Collaboration

Vitalik’s perspective is not a blanket rejection of AI autonomy; rather, it redefines the boundaries of AI’s value. He stresses that AI delivers maximum societal benefit when it enhances human abilities instead of replacing human decision-making, thereby improving overall efficiency, safety, and ethical standards.

In the decades ahead, human-AI collaboration may become the cornerstone of productivity transformation and a vital pathway for balancing social stability with technological ethics. This model positions AI as a tool for augmentation rather than an independent agent, helping prevent decision-making from escaping human oversight.

Challenges of Balancing Technology and Ethics

When considering the direction of AI development, ethical standards and technical constraints are equally critical. For instance, AI systems need to be capable enough for complex tasks while remaining subject to robust constraints that prevent misuse or loss of control. Achieving this involves promoting algorithmic transparency, fostering an open-source culture, and establishing mechanisms for social oversight.

Vitalik advocates for open-source AI projects to increase transparency in development processes and algorithmic logic. This approach enables collaboration among developers from diverse backgrounds and oversight institutions, helping to mitigate monopoly risks and security concerns associated with closed development, and promoting the healthy evolution of technology.

Implications for Industry Development

Vitalik’s insights offer valuable guidance for the AI industry:

- Encourage more companies to invest in the development of human augmentation tools instead of exclusively pursuing extreme autonomy.

- Emphasize the establishment of ethical guidelines so that AI advances efficiency while remaining safe and controllable.

- Promote open-source culture to make AI technology more transparent, facilitating social oversight and broad participation.

This approach is broadly consistent with concerns raised by AI safety researchers, who caution that excessive pursuit of autonomy can increase uncertainty and risk, while assistive AI keeps humans in control of and engaged in decision-making.

Conclusion and Future Trends

In summary, Vitalik Buterin’s reflections on AI extend beyond technical evaluation and demonstrate his deep concern for the social impact of AI and the future of humanity. As technology advances and AI capabilities continue to grow, maintaining human primacy and ensuring technology serves humanity are challenges that the global technology community and society must collectively address.

Looking ahead, AI development is likely to focus more on the co-evolution of humans and intelligent systems. This trend may become a key driver for transformation across technology, education, governance, and economic structures.
